About the Search Engine Spider Simulator

Because what search engine spiders see when they crawl a page strongly influences how well a site ranks, you need to know what they are seeing before you can optimize a website effectively. Spiders don’t read pages the way humans do, and they are blind to many elements, such as Flash and JavaScript, that are designed to attract and appeal to human visitors.

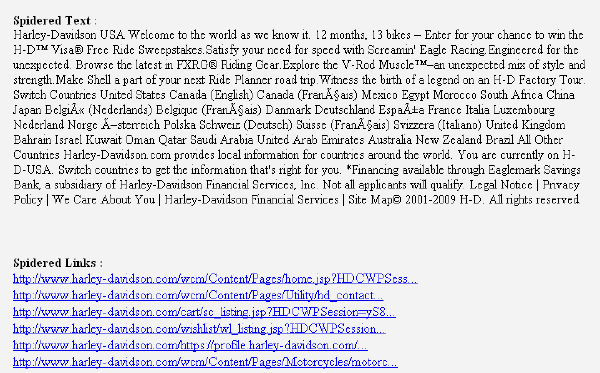

Although each search engine uses its own ranking algorithm, their robots index sites in much the same way. They are essentially text browsers: they read the text on a page and ignore everything else. Running a site through a simulator shows you what is and isn’t visible to the spiders.
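
To make the "text browser" idea concrete, here is a minimal sketch using Python’s standard-library `html.parser`. It assumes, as a deliberate simplification, that a spider keeps text nodes in source order and discards the contents of `<script>` and `<style>`; real crawlers are more sophisticated. The names `TextOnlyView` and `spider_text` are hypothetical.

```python
from html.parser import HTMLParser


class TextOnlyView(HTMLParser):
    """Collects text in source order, skipping script and style contents.

    Simplified model of a text-only crawler (an assumption, not any engine's logic).
    """

    SKIP = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0  # > 0 while inside a tag whose contents are ignored
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep non-empty text nodes that are not inside script/style.
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def spider_text(html: str) -> str:
    """Return the text a simplified text-only crawler would read (hypothetical helper)."""
    parser = TextOnlyView()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


if __name__ == "__main__":
    page = (
        "<html><head><title>Shop</title><script>var x = 1;</script></head>"
        "<body><h1>Blue Widgets</h1><p>Cheap blue widgets.</p></body></html>"
    )
    print(spider_text(page))  # Shop Blue Widgets Cheap blue widgets.
```

Everything a human sees only through scripts or plug-ins simply never reaches `chunks`, which is the gap a simulator is meant to expose.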

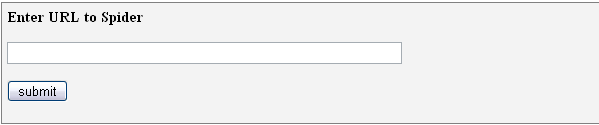

Webconfs.com offers a free Search Engine Spider Simulator tool that you can embed into your website. Enter a URL, and it displays the page contents the way a search engine robot would see them, including where the spider finds the keywords in your page’s text.
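
One way to picture "where the spider sees the keywords" is as word offsets in the extracted text: the lower the offset, the earlier the keyword appears. The sketch below assumes the text has already been extracted in source order and uses a deliberately simple tokenizer (lowercase letters, digits, apostrophes); `keyword_positions` is a hypothetical helper, not part of the Webconfs tool.

```python
import re


def keyword_positions(text: str, keyword: str) -> list[int]:
    """Return the word offsets at which `keyword` (one or more words) occurs in `text`.

    Matching is case-insensitive; punctuation is dropped by the simple tokenizer.
    """
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", keyword.lower())
    n = len(target)
    if n == 0:
        return []
    # Slide a window of n words across the text and record every exact match.
    return [i for i in range(len(words) - n + 1) if words[i:i + n] == target]


if __name__ == "__main__":
    text = "Shop Blue Widgets Cheap blue widgets."
    print(keyword_positions(text, "blue widgets"))  # [1, 4]
```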

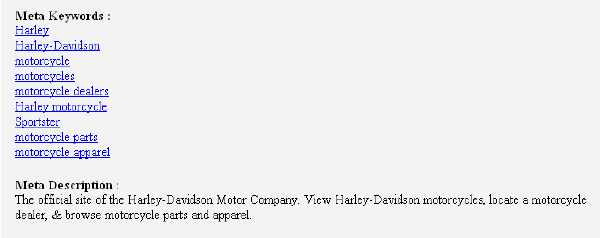

Keywords in the first paragraph carry more weight than keywords that appear later in the text. However, content that appears at the top of the page visually may not come first for a spider, which reads the page’s code from the top down. For instance, on a page laid out with tables, the code that describes the layout may come first, pushing the actual content several screens down in the source; as a result, the page may not be recognized as keyword-rich.

The simulator also displays the links that search engines will crawl, along with the page’s meta keywords and meta description.
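
A rough approximation of that links-and-meta report can be built with the same standard-library parser. This sketch assumes that every `<a>` with an `href` counts as a crawlable link (it ignores `rel="nofollow"`, robots.txt, and script-generated links) and reads only the `keywords` and `description` meta tags; `MetaAndLinks` and `page_summary` are hypothetical names.

```python
from html.parser import HTMLParser


class MetaAndLinks(HTMLParser):
    """Collects meta keywords/description and raw link targets from a page."""

    def __init__(self) -> None:
        super().__init__()
        self.meta: dict[str, str] = {}
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)  # attribute names arrive lowercased; values may be None
        if tag == "meta":
            name = (a.get("name") or "").lower()
            if name in ("keywords", "description"):
                self.meta[name] = a.get("content") or ""
        elif tag == "a" and a.get("href"):
            # Assumption: any anchor with a non-empty href is treated as crawlable.
            self.links.append(a["href"])


def page_summary(html: str) -> tuple[dict[str, str], list[str]]:
    """Return (meta tags, link targets) for a page (hypothetical helper)."""
    parser = MetaAndLinks()
    parser.feed(html)
    parser.close()
    return parser.meta, parser.links
```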

One drawback of this particular simulator is that it does not list internal and external links separately, which would make them easier to analyze. Nor does it display anchor text, so it cannot help you optimize that important ranking factor.
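
If you need that breakdown, a small script can produce it. The sketch below resolves each `href` against the page URL with `urllib.parse.urljoin`, treats a link as internal when its host matches the page’s host exactly, skips non-HTTP schemes such as `mailto:`, and records the whitespace-normalized anchor text. The exact-host rule is an assumption (it counts `www.example.com` and `example.com` as different sites), and `LinkCollector` and `classify_links` are hypothetical names.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects (href, anchor text) pairs for every <a href=...> on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[tuple[str, str]] = []
        self._href: str | None = None  # href of the <a> currently open, if any
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)  # includes text inside nested tags like <b>

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            anchor = " ".join("".join(self._text).split())  # collapse whitespace
            self.links.append((self._href, anchor))
            self._href = None


def classify_links(html: str, page_url: str):
    """Split links into (internal, external) lists of (absolute URL, anchor text)."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    host = urlparse(page_url).netloc.lower()
    internal, external = [], []
    for href, anchor in collector.links:
        absolute = urljoin(page_url, href)
        target = urlparse(absolute)
        if target.scheme not in ("http", "https"):
            continue  # skip mailto:, javascript:, and similar links
        bucket = internal if target.netloc.lower() == host else external
        bucket.append((absolute, anchor))
    return internal, external
```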