Dictate What Spiders Crawl on Your Site

You may not want search engine spiders to crawl every file or directory on your website, such as the /cgi-bin/ directory that holds your scripts or your images directory. To keep them out, you can place a robots.txt file on your website that tells spiders not to crawl those paths, so compliant search engines skip them and generally leave them out of their results.
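To make the mechanism concrete, here is a minimal Python sketch, assuming a site that wants every crawler to stay out of /cgi-bin/ and an images directory at the assumed path /images/. Rather than fetching a live file, it feeds the robots.txt text to Python's standard-library `urllib.robotparser` and asks which URLs a compliant crawler would skip. `example.com` is a placeholder domain.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content (assumed paths): "*" applies the rules to
# every crawler, and each Disallow line blocks one directory prefix.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cgi-bin/
Disallow: /images/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    parser = build_parser(ROBOTS_TXT)
    # Ask what a compliant crawler would do with a few placeholder URLs.
    for url in ("https://example.com/index.html",
                "https://example.com/cgi-bin/form.pl",
                "https://example.com/images/logo.png"):
        verdict = "crawl" if parser.can_fetch("*", url) else "skip"
        print(f"{url}: {verdict}")
```

Only the home page is reported as crawlable; both disallowed directories are skipped because their URL paths start with a blocked prefix.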

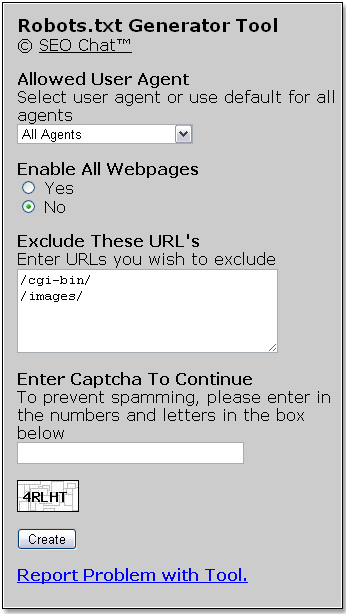

The Robots.txt Generator Tool from Developer Shed Network is a free tool that generates a simple robots.txt file for you to place in the root directory of your website (the same level as your home page, since crawlers look for the file at /robots.txt) to block specified files or directories from search engines. You choose which pages to block from crawling: you can apply the restrictions to all user agents or only to selected ones, and list the URLs you want to exclude. The tool then generates the text of the file based on those choices.
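The kind of output such a generator produces can be sketched in a few lines of Python. This is not the Developer Shed tool's implementation; it is a hypothetical `generate_robots_txt` function that takes a mapping of user agents to disallowed paths and writes one `User-agent` group per entry. The bot name `BadBot` and the paths are illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser


def generate_robots_txt(rules: dict[str, list[str]]) -> str:
    """Build robots.txt text from a {user_agent: [disallowed_paths]} mapping.

    Each user agent gets its own group of lines. An empty path list emits a
    bare "Disallow:" line, which robots.txt treats as "block nothing".
    """
    groups = []
    for user_agent, paths in rules.items():
        lines = [f"User-agent: {user_agent}"]
        if paths:
            lines.extend(f"Disallow: {path}" for path in paths)
        else:
            lines.append("Disallow:")
        groups.append("\n".join(lines))
    # Groups are separated by a blank line; the file ends with a newline.
    return "\n\n".join(groups) + "\n"


if __name__ == "__main__":
    # Hypothetical choices: shut one named bot out entirely and keep
    # every other crawler out of /cgi-bin/ and /images/.
    robots_txt = generate_robots_txt({
        "BadBot": ["/"],
        "*": ["/cgi-bin/", "/images/"],
    })
    print(robots_txt)

    # Sanity-check the result with Python's standard robots.txt parser.
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    print(parser.can_fetch("BadBot", "https://example.com/index.html"))     # False
    print(parser.can_fetch("OtherBot", "https://example.com/index.html"))   # True
    print(parser.can_fetch("OtherBot", "https://example.com/images/a.png")) # False
```

Keeping each user agent in its own group mirrors the "all or chosen user agents" choice described above: the `*` group covers every crawler that has no more specific group of its own.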

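This is a helpful tool that lets you keep your valuable site map while still keeping certain pages out of search engines. Keep in mind that robots.txt does not hide anything from public view: the file itself is publicly readable, only well-behaved crawlers honor it, and anyone who knows a URL can still visit it, so it is not a substitute for access control.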